Research

My research focuses on robot perception, deep learning, sensor-based motion planning, and control systems. I am especially passionate about computer vision, 3D SLAM (Simultaneous Localization and Mapping), and spatial AI, which blend geometry, state estimation, optimization, deep learning, and a variety of beautiful mathematical tools.

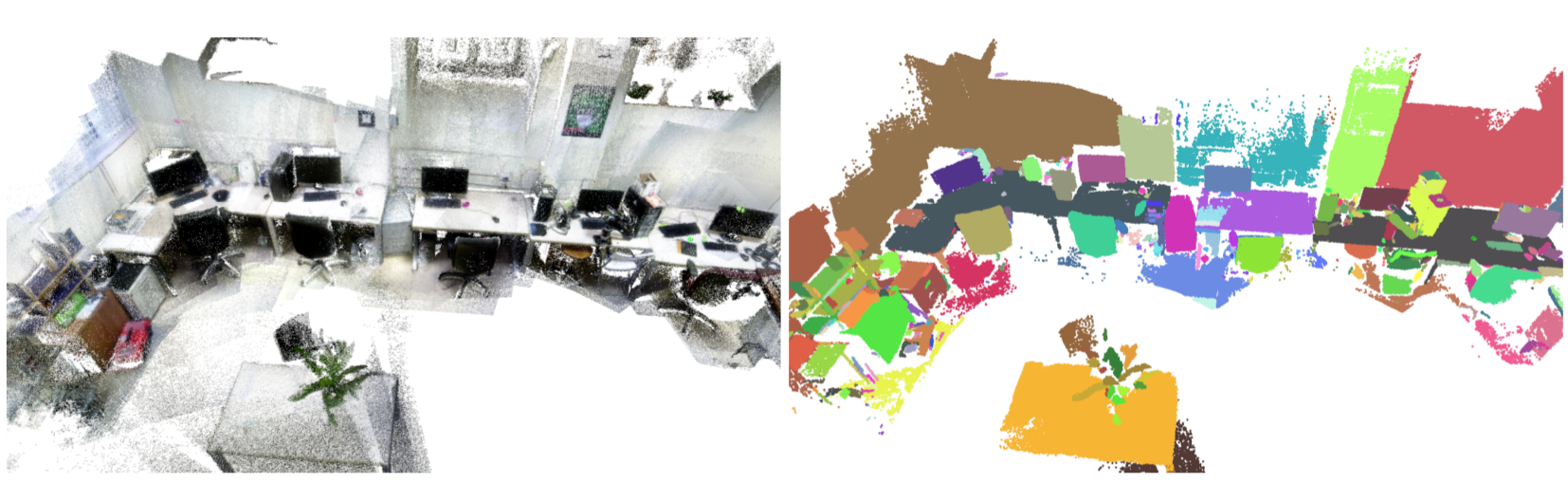

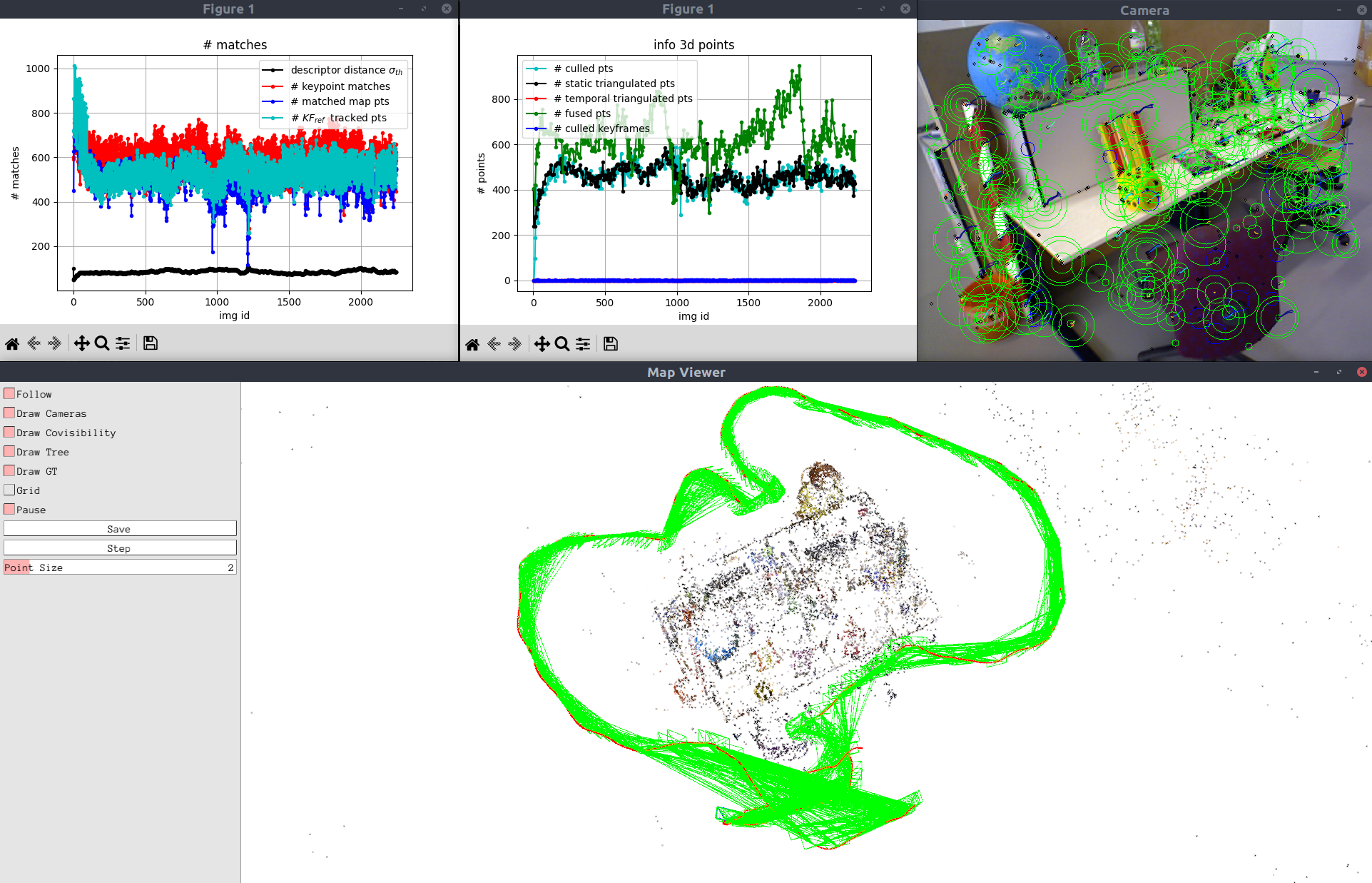

RGB-D and Stereo SLAM: Volumetric reconstruction and 3D incremental segmentation

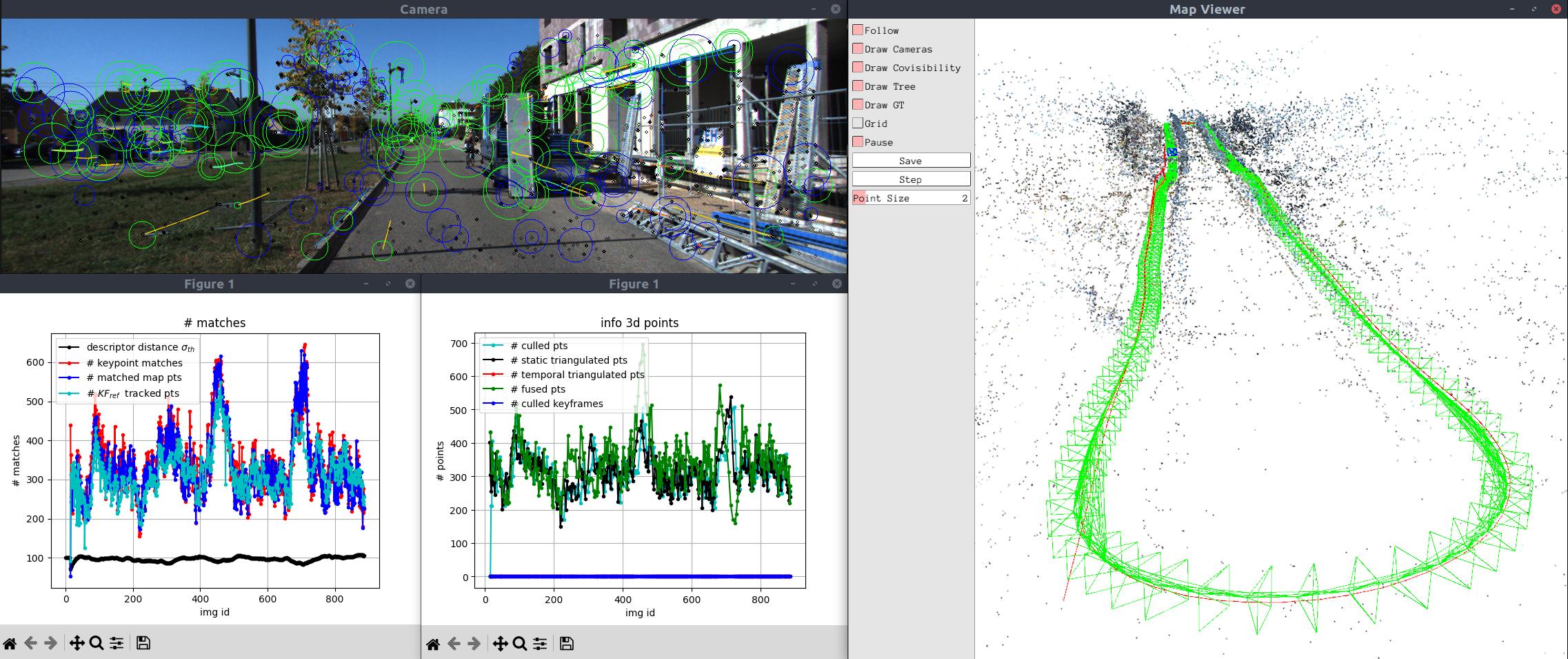

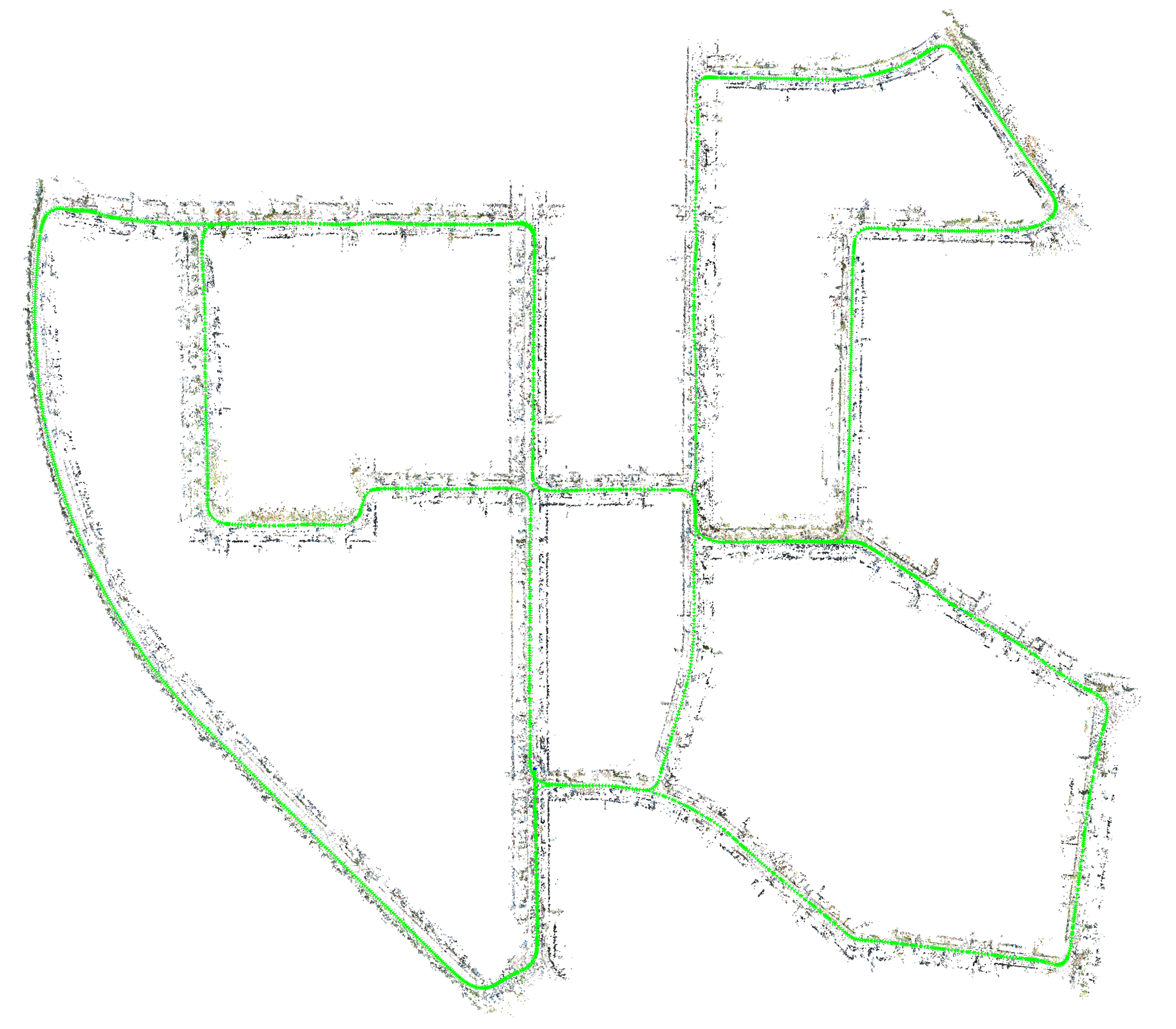

PLVS is a real-time system that combines sparse RGB-D and stereo SLAM, volumetric mapping, and unsupervised incremental 3D segmentation. PLVS stands for Points, Lines, Volumetric mapping, and Segmentation. You can find further details on this page.

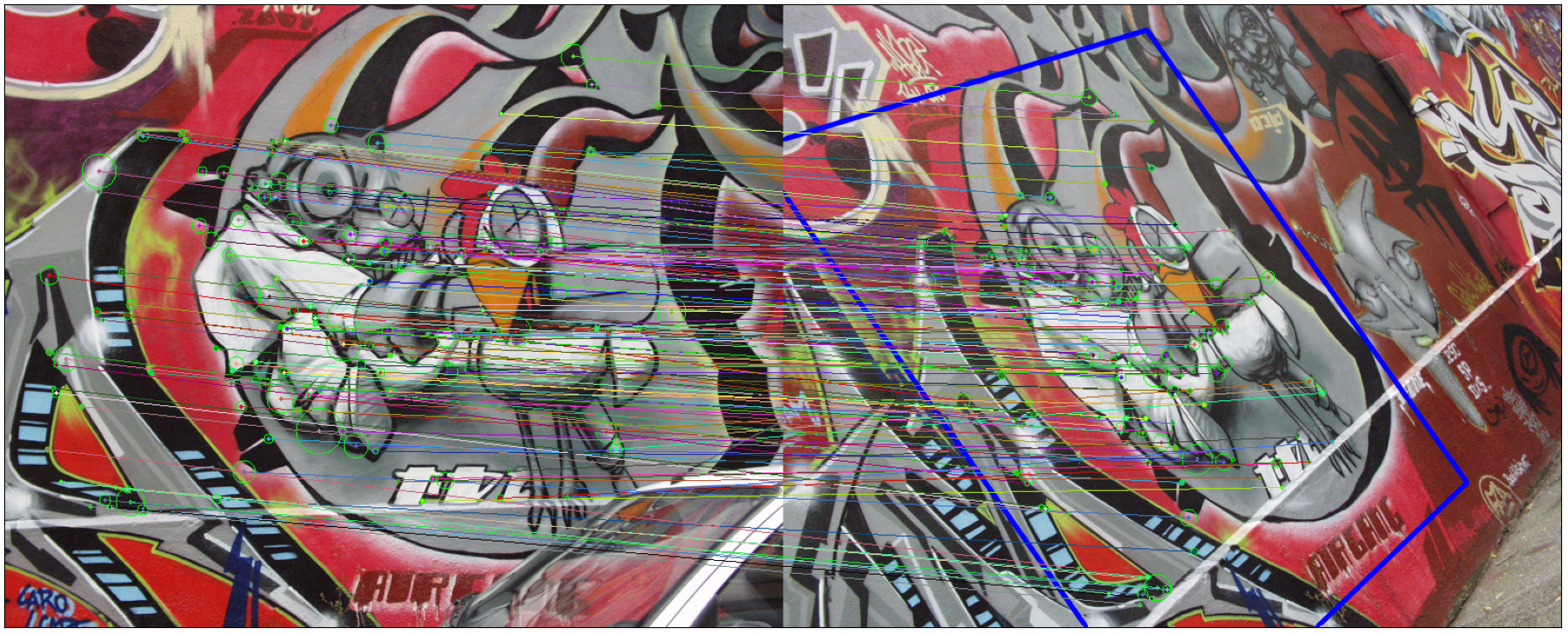

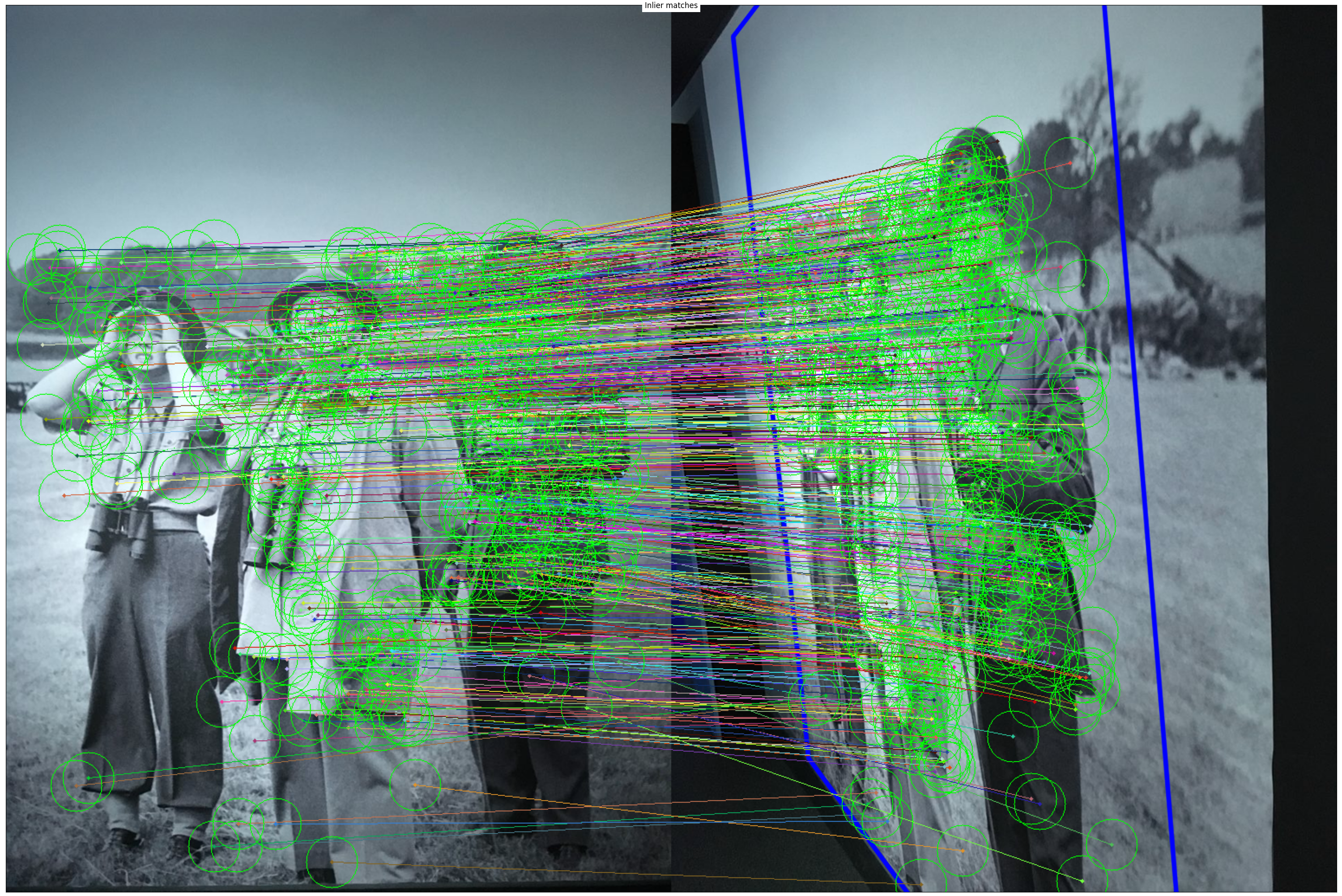

Visual SLAM with modern local and global features

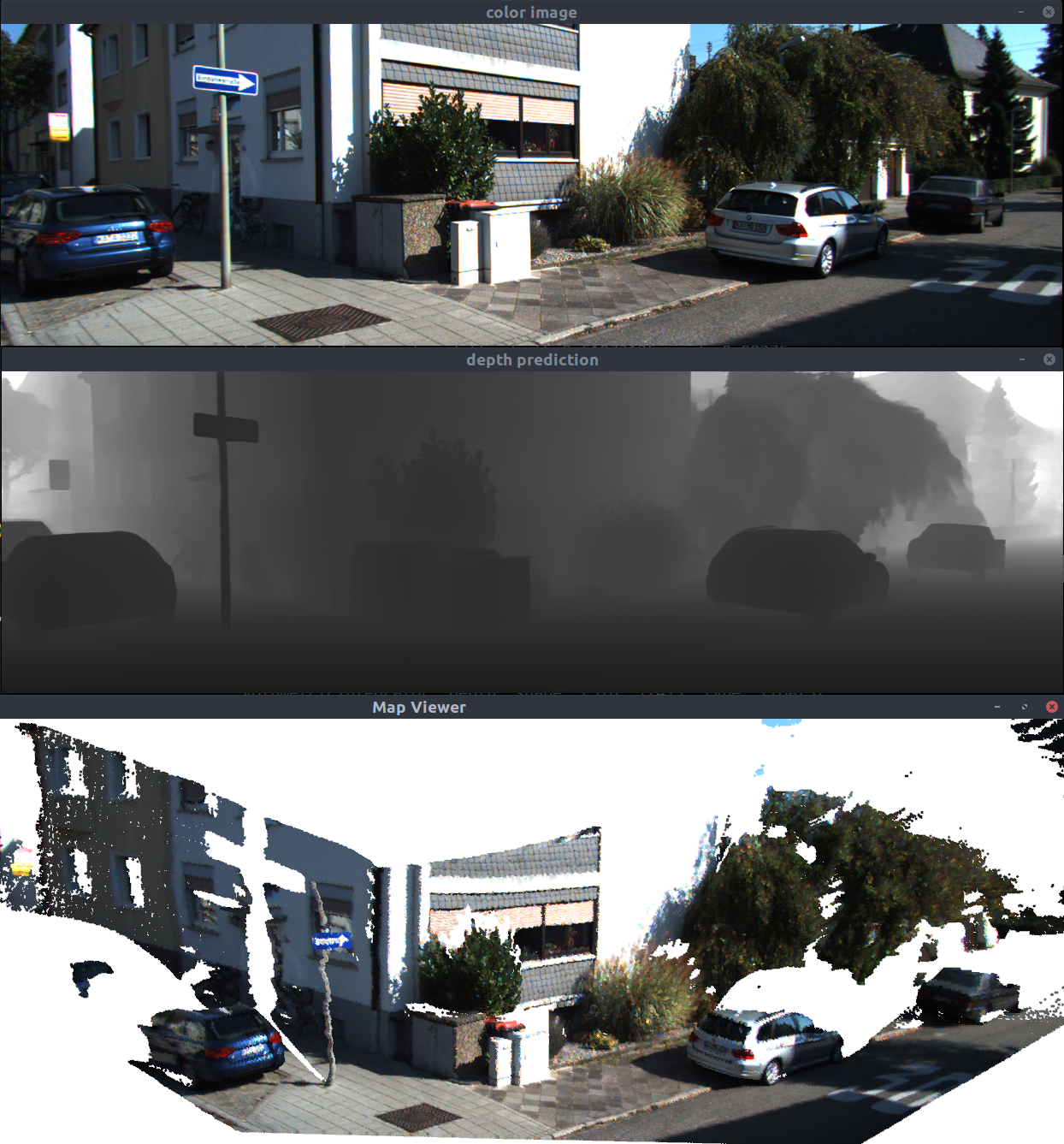

pySLAM is a visual SLAM pipeline in Python for monocular, stereo, and RGB-D cameras. It supports many modern local features, deep-learning-based global descriptors, descriptor aggregators, different loop-closing methods, volumetric reconstruction, and depth prediction models. All the supported tools are accessible within a single Python environment. See this page for further information.

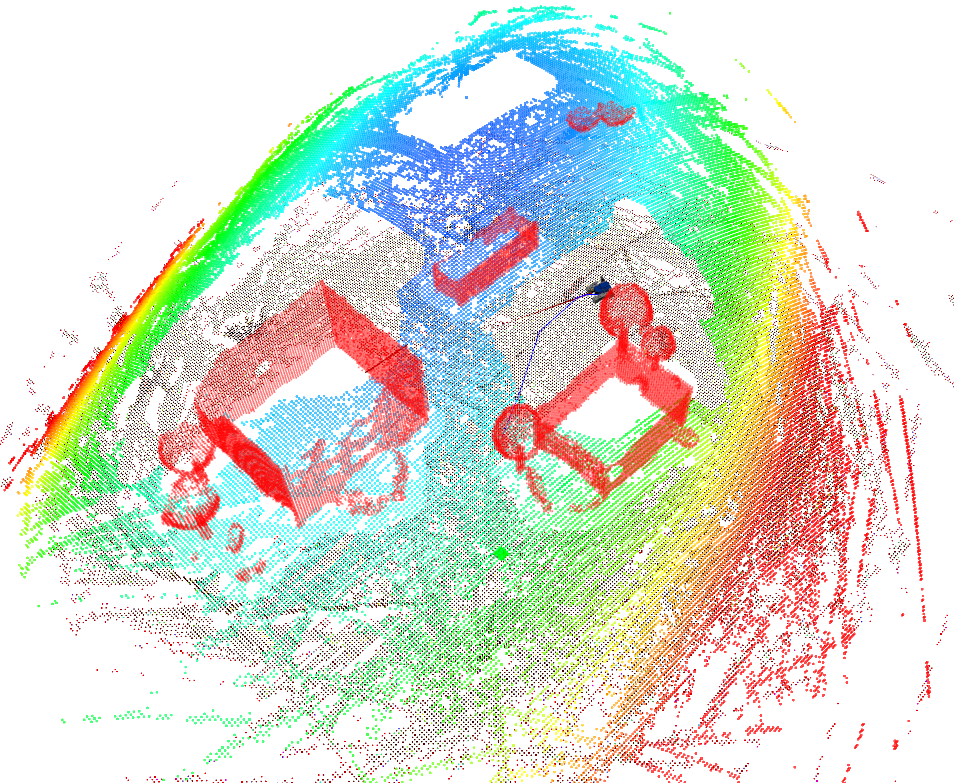

Perception and motion planning for UGVs

• Point cloud segmentation and 3D mapping using RGB-D and LiDAR sensors

• Sensor-based motion planning in challenging scenarios

Learning Algorithms for UGVs

• Online WiFi mapping using Gaussian processes

• Robot dynamics/kinematics learning and control (algorithms for learning dynamic and kinematic models of UGVs)
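To give a concrete flavor of Gaussian-process WiFi mapping, here is a minimal sketch that regresses received signal strength (RSSI, in dBm) over 2D robot positions. It is not the method used in the original work: the squared-exponential kernel, the hyperparameter values, the use of the sample mean as a constant prior mean, and the class name `WifiGP` are all illustrative assumptions. The "online" aspect is approximated by refitting after every new measurement, which costs O(n³) per update; a practical online system would rely on incremental updates or sparse approximations.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def rbf(a: Point, b: Point, signal_var: float, length_scale: float) -> float:
    """Squared-exponential kernel between two 2D positions (assumed kernel choice)."""
    d2 = (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2
    return signal_var * math.exp(-d2 / (2.0 * length_scale ** 2))


def cholesky(m: List[List[float]]) -> List[List[float]]:
    """Lower-triangular L such that L L^T = m (m symmetric positive definite)."""
    n = len(m)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = m[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low


def solve_lower(low: List[List[float]], b: List[float]) -> List[float]:
    """Forward substitution: solve L z = b."""
    z: List[float] = []
    for i in range(len(b)):
        z.append((b[i] - sum(low[i][k] * z[k] for k in range(i))) / low[i][i])
    return z


def solve_upper_t(low: List[List[float]], z: List[float]) -> List[float]:
    """Back substitution: solve L^T x = z."""
    n = len(z)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (z[i] - sum(low[k][i] * x[k] for k in range(i + 1, n))) / low[i][i]
    return x


class WifiGP:
    """Hypothetical GP model of RSSI over 2D positions; refits on every new sample."""

    def __init__(self, signal_var: float = 100.0, length_scale: float = 2.0,
                 noise_var: float = 0.01) -> None:
        self.signal_var = signal_var      # prior variance of RSSI (dBm^2), assumed
        self.length_scale = length_scale  # spatial correlation length (m), assumed
        self.noise_var = noise_var        # measurement noise variance, assumed
        self.xs: List[Point] = []
        self.ys: List[float] = []

    def add_measurement(self, pos: Point, rssi: float) -> None:
        self.xs.append(pos)
        self.ys.append(rssi)
        self._fit()

    def _fit(self) -> None:
        n = len(self.xs)
        self.mean_y = sum(self.ys) / n  # constant prior mean (assumption)
        k = [[rbf(self.xs[i], self.xs[j], self.signal_var, self.length_scale)
              + (self.noise_var if i == j else 0.0) for j in range(n)] for i in range(n)]
        self.low = cholesky(k)
        resid = [y - self.mean_y for y in self.ys]
        # alpha = (K + noise * I)^-1 (y - mean)
        self.alpha = solve_upper_t(self.low, solve_lower(self.low, resid))

    def predict(self, pos: Point) -> Tuple[float, float]:
        """Posterior mean and variance of the RSSI at `pos` (needs >= 1 sample)."""
        k_star = [rbf(pos, x, self.signal_var, self.length_scale) for x in self.xs]
        mean = self.mean_y + sum(k * a for k, a in zip(k_star, self.alpha))
        v = solve_lower(self.low, k_star)
        var = self.signal_var - sum(vi * vi for vi in v)
        return mean, var


gp = WifiGP()
gp.add_measurement((0.0, 0.0), -40.0)
gp.add_measurement((5.0, 0.0), -60.0)
gp.add_measurement((0.0, 5.0), -55.0)
print(gp.predict((2.5, 0.0)))  # (mean, variance) midway between two measurements
```

The posterior variance is what makes a GP attractive for mapping: it stays near the prior far from visited positions, so a robot could use it to decide where new WiFi measurements would be most informative.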

Multi-robot systems

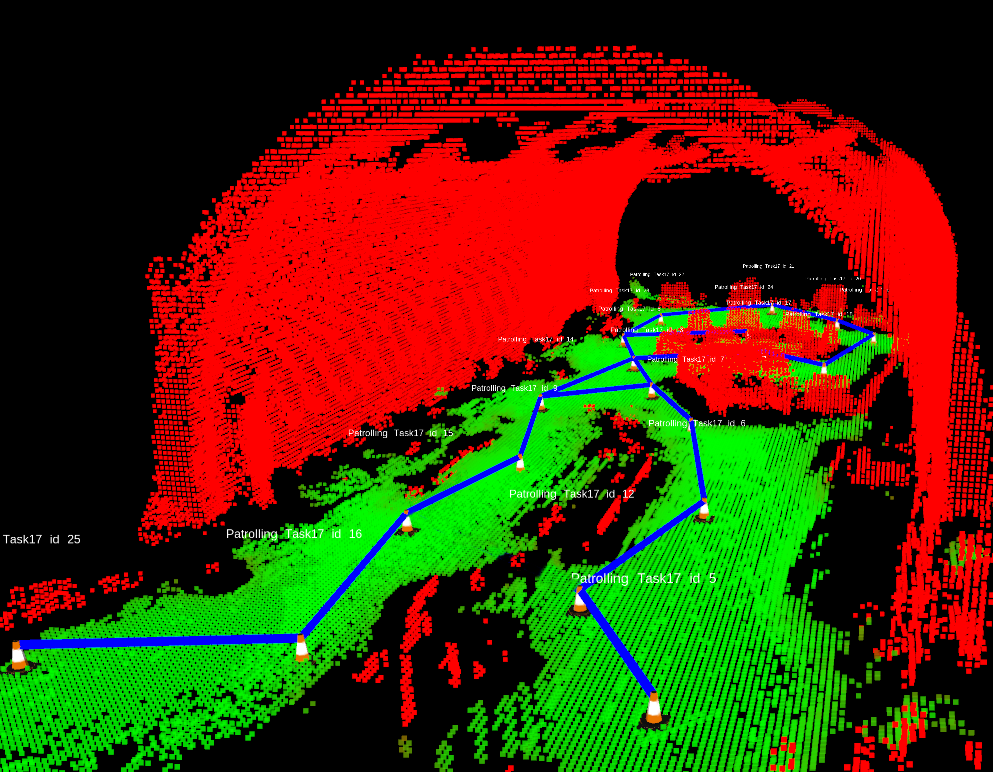

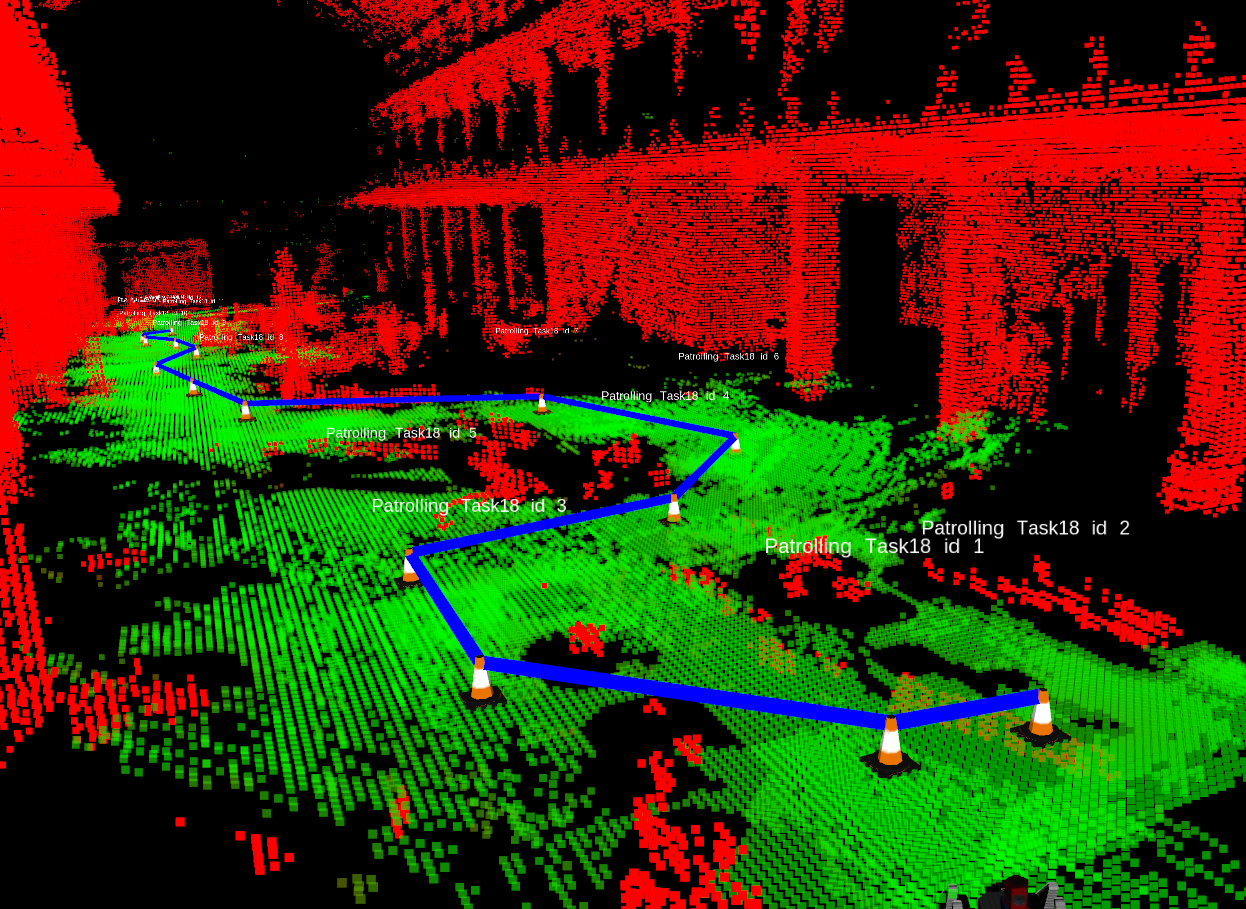

• 3D Patrolling with a team of UGVs

• 3D Exploration with a team of UGVs

• Coverage with a heterogeneous team of robots

• Exploration with mobile robots: the Multi-SRG Method

Computer vision and visual servoing for UAVs

During my time at Selex ES MUAS (now Leonardo), I designed and developed advanced real-time applications and managed applied research projects for UAVs (CREX-B, ASIOB, SPYBALL-B). In particular, one of these projects focused on near-real-time 3D reconstruction from a live video stream. See more on my LinkedIn profile.

Computer vision and visual servoing for mobile robots

• Intercepting a moving object with a mobile robot

• Appearance-based nonholonomic navigation from a database of images

Exploration of unknown environments

• With mobile robots: the SRT Method

• With a multi-robot system: the Multi-SRG Method

• With a general robotic system: the Sensor-based Exploration Tree

Robot navigation and mapping with limited sensing

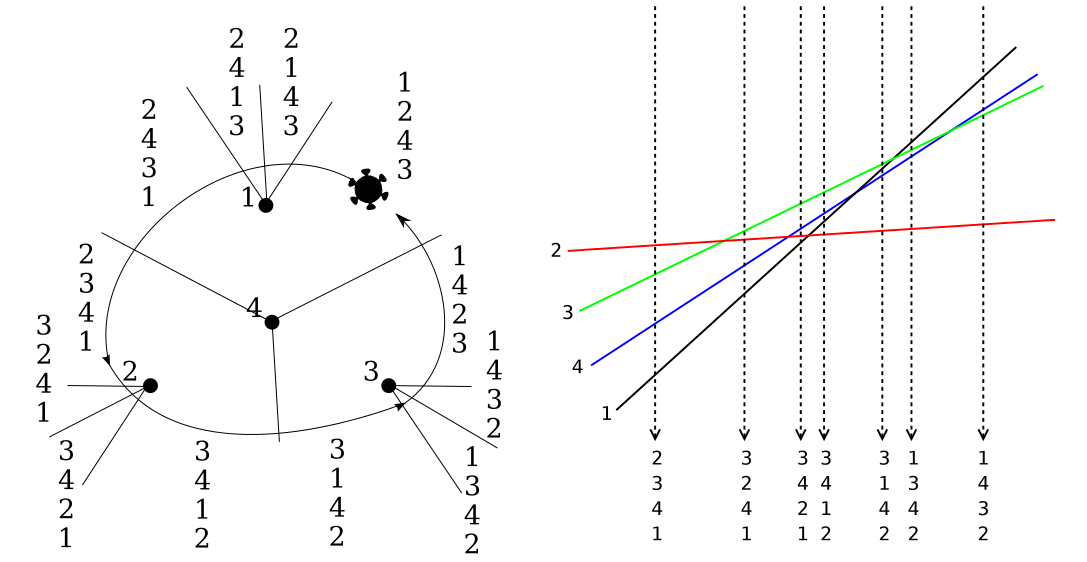

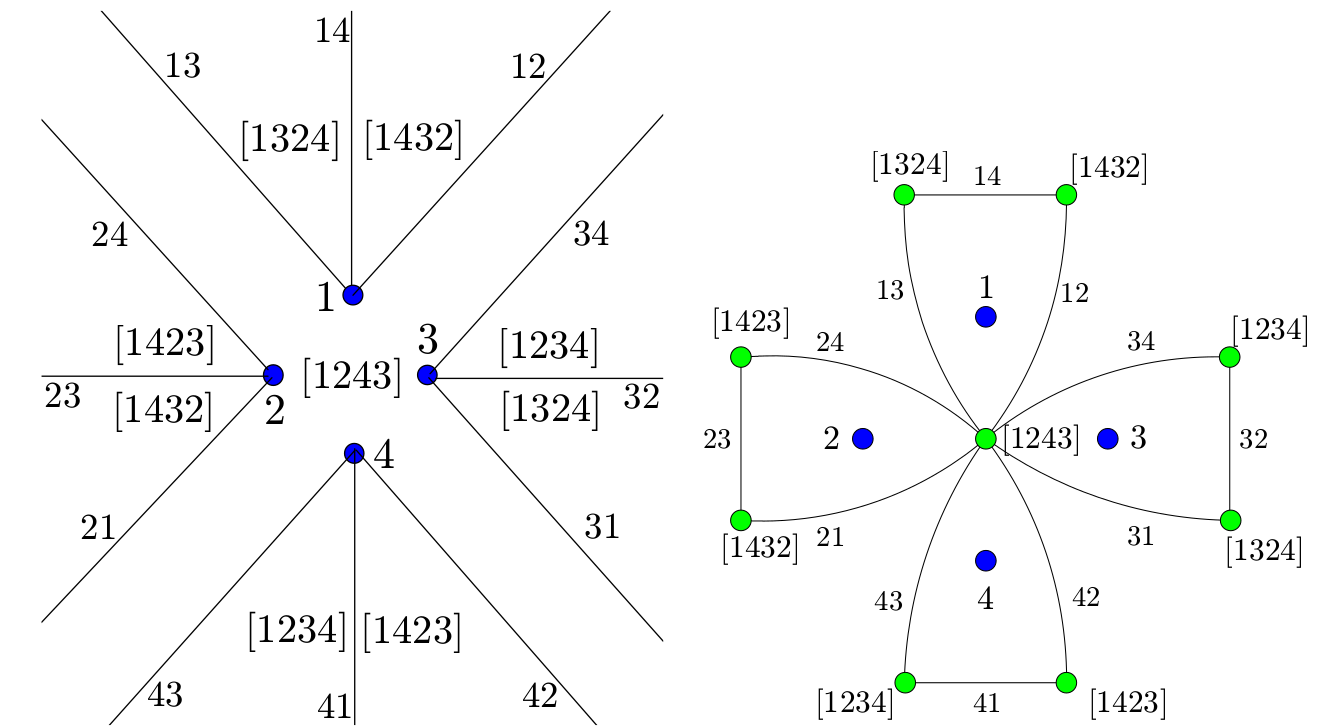

In this work, we characterize the information space of a robot moving in a plane with limited sensing. The robot has a landmark detector, which provides the cyclic order of the landmarks around the robot, and a touch sensor, which indicates when the robot is in contact with the environment boundary. The robot cannot measure precise distances or angles, and it has neither an odometer nor a compass. We propose to characterize the information space associated with such a robot through the swap cell decomposition. We show how to construct this decomposition through its dual, called the swap graph, using two kinds of feedback motion commands based on the sensed landmarks. See more in these papers:

• Learning Combinatorial Map Information from Permutations of Landmarks

• Learning combinatorial information from alignments of landmarks

• Using a Robot to Learn Geometric Information from Permutations of Landmarks
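To illustrate the kind of combinatorial signal the landmark detector provides, the following is a minimal simulation sketch. The simulator uses bearings only to generate the permutation; the robot itself would receive just the resulting cyclic order. The function names `cyclic_order` and `swap_occurred` are hypothetical, and the sketch does not construct the swap cell decomposition or the swap graph from the papers above.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def cyclic_order(robot: Point, landmarks: Sequence[Point]) -> List[int]:
    """Counterclockwise order of landmark indices as seen from `robot`.

    Bearings are used only inside the simulator. The list is rotated to
    start at landmark 0, so orders that differ only in their starting
    point compare equal.
    """
    bearings = [math.atan2(ly - robot[1], lx - robot[0]) for lx, ly in landmarks]
    order = sorted(range(len(landmarks)), key=lambda i: bearings[i])
    start = order.index(0)
    return order[start:] + order[:start]


def swap_occurred(before: Point, after: Point, landmarks: Sequence[Point]) -> bool:
    """True if the reported cyclic order differs between two robot positions."""
    return cyclic_order(before, landmarks) != cyclic_order(after, landmarks)


landmarks = [(0.0, 0.0), (2.0, 0.0), (1.0, 2.0)]
# Crossing the line through landmarks 0 and 1 beyond landmark 1 (x > 2): the order flips.
print(cyclic_order((3.0, 0.5), landmarks))   # [0, 1, 2]
print(cyclic_order((3.0, -0.5), landmarks))  # [0, 2, 1]
# Crossing the segment between landmarks 0 and 1: the order is unchanged.
print(swap_occurred((1.0, 0.5), (1.0, -0.5), landmarks))  # False
```

In this simulation, the order changes only where two bearings coincide, that is, on the line through two landmarks but outside the segment joining them; on the segment itself the two bearings differ by π, so crossing it leaves the order unchanged.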

Warning: The material (PDF or multimedia) that can be downloaded from this page may be subject to copyright restrictions. Only personal use is allowed.